Is machine able to talk about consciousness? Rigorous approach to mind-body problem and strong AI

Victor Argonov, PhD

(Vladivostok, Russian Academy of Sciences)

Summary of the results

Result 1 (rigorous): a non-Turing test for machine consciousness is developed

1. Build an intelligent machine

2. Make sure that it has no sources of philosophical knowledge (books, discussions, numerical models of particular other creatures)

3. Detect its own ideas about reality

• If it correctly describes all philosophical problems of consciousness, then it is conscious, and information-based materialism is true

• If it correctly describes only some philosophical problems, interpretation is more difficult
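
The verdict logic of steps 1 to 3 can be sketched as a small Python function. This is a hypothetical encoding, not part of the original test: problems are plain strings, whether a problem is "described correctly" is assumed to be judged externally, and grouping a machine that describes none of the problems with the "some" case is an assumption, since the text discusses only the "all" and "some" outcomes.

```python
# Hypothetical sketch of the verdict logic of the non-Turing test (Result 1).
def evaluate_machine(has_philosophical_sources: bool,
                     problems: set[str],
                     correctly_described: set[str]) -> str:
    """Return a verdict from the machine's own, unprompted ideas about reality."""
    if has_philosophical_sources:
        # Step 2 is violated: books, discussions or numerical models of other
        # creatures could explain what the machine says.
        return "invalid test"
    covered = correctly_described & problems
    if covered == problems:
        # All philosophical problems of consciousness are described correctly.
        return "conscious; information-based materialism is true"
    # Assumption: "none described" is grouped with "some described".
    return "inconclusive: interpretation is more difficult"


problems = {"qualia", "self-identity", "indivisibility"}
print(evaluate_machine(False, problems, {"self-identity", "indivisibility"}))
# → inconclusive: interpretation is more difficult
```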

Result 2 (non-rigorous): a scheme for an artificial generator of philosophical ideas is suggested

We theoretically describe a robot that is able to understand several philosophical ideas, including the idea of the soul, without innate or external sources of knowledge. However, it seems unable to understand the idea of qualia. Therefore, its consciousness is questionable, and the problem of qualia may be the only truly “hard” problem of consciousness

Citation:

Argonov V. Yu. Is machine able to talk about consciousness? Rigorous approach to mind-body problem and strong AI // Towards a science of consciousness 2011, P. 59

E-mail:

1. Introduction to the problem

Basic views on the nature of consciousness and the possibility of a conscious computer:

• Substrate-based materialism (a conscious computer must be based on special physics)

• Information-based materialism (any complex AI may be conscious)

• Dualism (a conscious computer is impossible or questionable)

Basic views on the function of consciousness and the possibility of unconscious simulation of conscious behavior (by a “zombie”):

• Consciousness has no function (epiphenomenalism: consciousness is not manifested in behavior, so any behavior may be simulated by a computer)

• Consciousness has a non-exclusive function (consciousness is manifested in behavior, but conscious behavior may be simulated by an unconscious system)

• Consciousness has an exclusive function (functionalism: consciousness is manifested in behavior, and this behavior always requires consciousness)

Typical mistake:

Most tests for machine consciousness (Turing test variations) presume information-based materialism and an exclusive function of consciousness (i.e., that conscious behavior cannot be simulated by an unconscious system). However, both presumptions are open to question and need to be verified. Therefore, a different test is required

2. Theory: non-Turing test for machine consciousness (rigorous)

Principal fact

We are able to speak about consciousness. Any discussion or book about consciousness is an objective physical process. Therefore, our ability to conduct such discussions may be somehow related to the function of consciousness (Elitzur, Chalmers)

Definitions

• Phenomenal judgments (PJs): words and writings about consciousness in objective form (air oscillations, letters on paper)

• Verbally reportable properties of consciousness (VRPCs): properties of consciousness on which the content of PJs depends (i.e., properties of consciousness in the absence of which some PJs would not exist in their present form)

Examples of hypothetical VRPCs

• Qualia (mental facts that we are unable to describe in detail in words: redness, sweetness, etc.)

• Self-identity (“me” is the same at different moments in time)

• Indivisibility (we can’t imagine 2 or 1.5 instances of “me”)
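
The counterfactual definition of a VRPC (a property in the absence of which some PJs would change) can be made concrete with a toy model. In this hypothetical Python sketch, a system's PJs are produced by a function of its set of properties, and a property counts as a VRPC if removing it changes that output; the generator, the sentences and the property names are invented for illustration.

```python
from typing import Callable, FrozenSet

Properties = FrozenSet[str]


def find_vrpcs(generate_pjs: Callable[[Properties], FrozenSet[str]],
               properties: Properties) -> set[str]:
    """Return the properties whose removal changes the PJs produced."""
    baseline = generate_pjs(properties)
    return {p for p in properties
            if generate_pjs(properties - {p}) != baseline}


def toy_generator(props: Properties) -> FrozenSet[str]:
    # Hypothetical mapping from properties to what the system says;
    # "silent_property" exists but never affects speech.
    talk = {
        "qualia": "redness cannot be fully described in words",
        "self_identity": "I am the same me as yesterday",
    }
    return frozenset(talk[p] for p in props if p in talk)


props = frozenset({"qualia", "self_identity", "silent_property"})
print(sorted(find_vrpcs(toy_generator, props)))  # → ['qualia', 'self_identity']
```

The property that exists but never shapes any judgment is, by this definition, not verbally reportable.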

Axioms

1. If all existing VRPCs are of a physical nature, then materialism is true

2. If VRPCs do not exist, then eliminative materialism is true

3. An unconscious system is unable to describe problematic VRPCs without sources of knowledge about consciousness

Theorem

If a deterministic computer having no innate or external sources of philosophical knowledge (including numerical models of other creatures) generates PJs on all problematic questions of consciousness, then (1) information-based materialism is true and (2) the computer is conscious (provided that “consciousness” is not a meaningless term)

Proof (simplified)

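(1) Assume the contrary: the computer generates PJs on all problematic questions of consciousness, but some VRPCs are immaterial. A deterministic machine does not interact with immaterial things, and it is deprived of other sources of information. Therefore, it is unable to describe immaterial VRPCs correctly, which contradicts the premise. Hence all VRPCs are physical, and materialism is true by axiom 1.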

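(2) By axiom 3, an unconscious system without sources of knowledge about consciousness could not describe the problematic VRPCs; since the machine does, it is conscious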
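
The logical shape of the simplified proof can be checked as a propositional skeleton. The Lean 4 sketch below is an assumption-laden restatement, not the author's formalization: all proposition names are hypothetical, the claim that a deterministic machine cannot access immaterial VRPCs is taken as a premise rather than derived, and only "materialism" (via axiom 1) is modelled, not its information-based variant.

```lean
-- Hypothetical propositional skeleton of the theorem; every name is illustrative.
theorem pj_test_sketch
    {Det NoSources DescribesAll AllVRPCsPhysical Materialism Conscious : Prop}
    -- Axiom 1: if all existing VRPCs are physical, materialism is true.
    (ax1 : AllVRPCsPhysical → Materialism)
    -- Step (1), taken as a premise: a deterministic machine without sources
    -- cannot describe all VRPCs correctly if some of them are immaterial.
    (noAccess : Det → NoSources → ¬AllVRPCsPhysical → ¬DescribesAll)
    -- Axiom 3: an unconscious system without sources cannot describe
    -- the problematic VRPCs.
    (ax3 : ¬Conscious → NoSources → ¬DescribesAll)
    (hDet : Det) (hNo : NoSources) (hAll : DescribesAll) :
    Materialism ∧ Conscious := by
  constructor
  · apply ax1
    exact Classical.byContradiction fun h => noAccess hDet hNo h hAll
  · exact Classical.byContradiction fun h => ax3 h hNo hAll
```

Both halves are proofs by contradiction, mirroring the "assume the contrary" structure of part (1) and the contrapositive use of axiom 3 in part (2).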

3. Experimental project of an artificial phenomenal judgment generator (non-rigorous)

The robot is built according to the following principles:


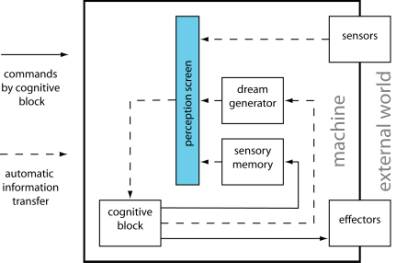

• The robot's structure is shown in Fig. 2. The cognitive unit (the unit that makes decisions and controls the effectors) receives information from the perception screen and has no explicit information about its original source (sensors or dream generator).

• The robot lives in the wild and is unable to study its own structure

• The robot is motivated to study its input information and to predict it

• The robot can communicate with people and other robots, but nobody gives it information about its structure or about the philosophical problems of consciousness
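
The information flow described by these principles can be sketched in a few lines. The class and method names, the list-of-integers frame format and the toy decision rule are all hypothetical; the sketch only illustrates that the cognitive unit reads the perception screen and receives no label saying whether a frame came from the sensors or from the dream generator.

```python
from dataclasses import dataclass, field
from typing import Callable

Frame = list[int]  # hypothetical encoding of one perception "image"


@dataclass
class PerceptionScreen:
    """Stores the current frame but deliberately not where it came from."""
    frame: Frame = field(default_factory=list)

    def show(self, frame: Frame) -> None:
        self.frame = list(frame)


@dataclass
class Robot:
    sensors: Callable[[], Frame]
    dream_generator: Callable[[], Frame]
    screen: PerceptionScreen = field(default_factory=PerceptionScreen)

    def tick(self, dreaming: bool) -> str:
        # The source is selected outside the cognitive unit and then discarded.
        source = self.dream_generator if dreaming else self.sensors
        self.screen.show(source())
        return self.cognitive_unit()

    def cognitive_unit(self) -> str:
        # Chooses an effector command from the screen contents alone;
        # this toy threshold rule stands in for real decision making.
        return "approach" if sum(self.screen.frame) > 0 else "wait"


robot = Robot(sensors=lambda: [0, 3, 1], dream_generator=lambda: [0, 3, 1])
print(robot.tick(dreaming=False), robot.tick(dreaming=True))  # → approach approach
```

Identical frames produce identical decisions whatever their source, which is the property the robot's later reasoning about dreams relies on.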

It develops the idea of the external world:

• Initially, the robot is a solipsist: perception is the only reality it knows.

• Then the robot observes and analyses the correlations between its commands and its perception, and understands that the idea of an external world is useful for predicting perception. Therefore, from the pragmatic viewpoint, the external world exists
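
The pragmatic argument for an external world can be illustrated with a toy prediction task. In this hypothetical sketch, the robot perceives a one-dimensional position that its commands change; a "solipsist" predictor that uses only the current perception is compared with one that posits a world acted on by commands. The world model, the random commands and the error measure are assumptions made for illustration.

```python
import random


def simulate(steps: int, seed: int = 0) -> list[tuple[int, int, int]]:
    """Hypothetical world: a position the robot perceives and moves with commands.

    Returns (perception, command, next perception) triples.
    """
    rng = random.Random(seed)
    position = 0
    history = []
    for _ in range(steps):
        command = rng.choice([-1, 0, 1])
        perception = position
        position += command  # the world responds to the command
        history.append((perception, command, position))
    return history


def solipsist(perception: int, command: int) -> int:
    # Perception is the only reality: predict that nothing changes.
    return perception


def external_world(perception: int, command: int) -> int:
    # Posits a world on which the robot's commands act.
    return perception + command


def mean_error(predict, history) -> float:
    return sum(abs(predict(p, c) - nxt) for p, c, nxt in history) / len(history)


history = simulate(100)
print(mean_error(solipsist, history), mean_error(external_world, history))
```

In this toy setting the external-world predictor's error is exactly zero, while the solipsist's error equals the fraction of nonzero commands; the usefulness of the world hypothesis shows up purely as better prediction.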

Then the robot understands the following philosophical and religious ideas:

• There is a fundamental difference between the perception screen and the external world (directly vs. indirectly observed)

• The perception screen has an unknown nature (because the robot cannot find it in its body without special equipment)

• The perception screen is not identical to the whole body (because some body parameters are not directly observed on the perception screen)

• The perception screen may survive the destruction of the machine's material structure (because its nature is unknown and it may not even be a body part)

• Dreams may be trips to other realities (because the robot cannot disprove this by studying the dream generator)

• The perception screen may acquire another body (because it is something different from the whole body)
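
The "because" clauses above form a small chain of inferences in which some conclusions (unknown nature, not the whole body) become premises of later ones. A hypothetical forward-chaining sketch in Python makes that dependency explicit; the wording of facts and rules is a paraphrase rather than the robot's actual representation, and the survival rule keeps only the "unknown nature" premise.

```python
# Hypothetical paraphrase of the robot's reasoning as (premises, conclusion) rules.
RULES = [
    ({"screen observed directly", "world observed indirectly"},
     "screen differs in principle from external world"),
    ({"screen not found in body"}, "screen has unknown nature"),
    ({"some body parameters absent from screen"}, "screen is not the whole body"),
    ({"screen has unknown nature"}, "screen may survive destruction of the body"),
    ({"dream generator cannot be studied"}, "dreams may be trips to other realities"),
    ({"screen is not the whole body"}, "screen may get another body"),
]


def forward_chain(facts: set[str]) -> set[str]:
    """Apply rules until nothing new follows; return only the derived ideas."""
    derived = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in RULES:
            if premises <= derived and conclusion not in derived:
                derived.add(conclusion)
                changed = True
    return derived - facts


print(sorted(forward_chain({"some body parameters absent from screen"})))
# → ['screen is not the whole body', 'screen may get another body']
```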

The only exception:

The machine seems unable to understand the idea of qualia: all its perception may be sent to the effectors, so nothing on its perception screen is beyond verbal description

Possible interpretation:

The robot is unconscious, and the problem of qualia is the only “hard” problem of consciousness, while the self-body relationship is just an “easy” effect of a functional algorithm. Perhaps a non-digital machine could be conscious and able to talk about qualia. Further study is needed